A stepping stone towards end-to-end encryption on mobile

It has been a while since our first release of end-to-end encryption for the web app, and ever since we have been working to enhance and improve it. One of these enhancements was the introduction of the Double Ratchet algorithm, through libolm, together with automatic key negotiation.

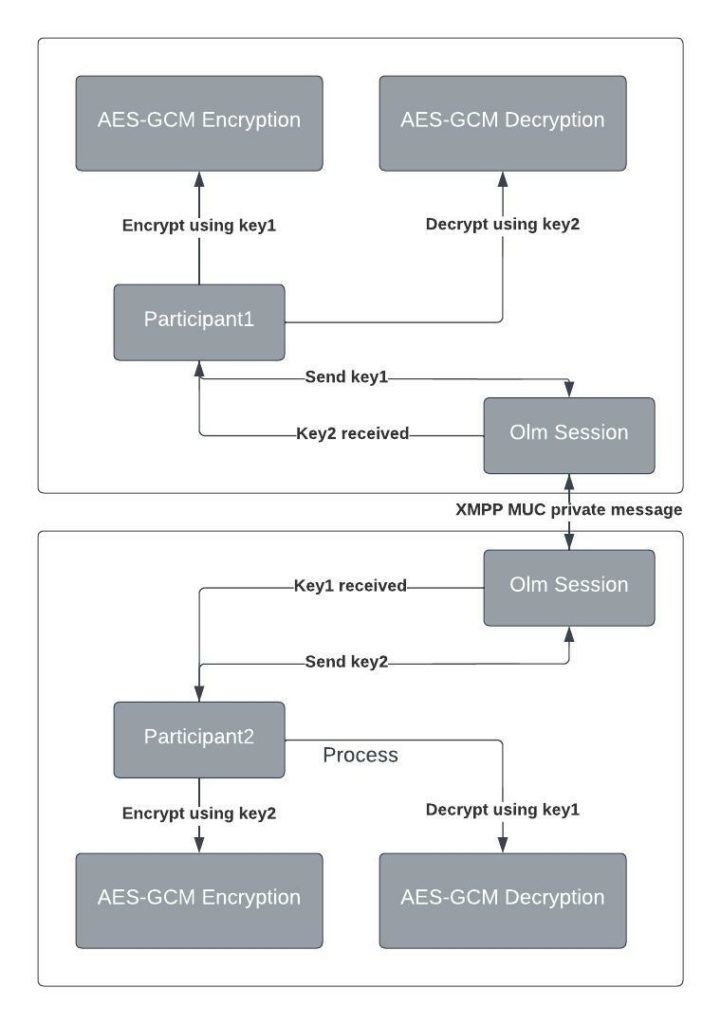

Each participant has a randomly generated key which is used to encrypt the media. The key is distributed to the other participants (so they can decrypt the media) via an E2EE channel which is established with Olm (using XMPP MUC private messages). You can read more about it in our whitepaper.
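The distribution step can be sketched as follows. This is a minimal illustration under stated assumptions, not the Jitsi implementation: `PairwiseSession`, `SendPrivateMessage`, `distributeMediaKey`, `toHex` and the JSON payload format are hypothetical names chosen for this example. The point it shows is that the same media key is encrypted separately for every remote participant, each time with the pairwise Olm session established with that participant.

```typescript
// Hypothetical, minimal stand-ins for an olm session and the XMPP layer.
type OlmMessage = { type: number; body: string };

interface PairwiseSession {
  encrypt(plaintext: string): OlmMessage;
}

type SendPrivateMessage = (participantId: string, message: OlmMessage) => void;

// Hex-encode the raw key so it can travel as text inside the Olm payload.
function toHex(bytes: Uint8Array): string {
  return Array.from(bytes, (b) => ("0" + b.toString(16)).slice(-2)).join("");
}

// Encrypt this participant's media key once per remote participant, using the
// pairwise Olm session with that participant, and hand each ciphertext to the
// signaling layer (e.g. an XMPP MUC private message).
function distributeMediaKey(
  mediaKey: Uint8Array,
  sessions: Map<string, PairwiseSession>,
  send: SendPrivateMessage,
): void {
  // Hypothetical payload format, chosen only for this sketch.
  const payload = JSON.stringify({ key: toHex(mediaKey) });
  sessions.forEach((session, participantId) => {
    send(participantId, session.encrypt(payload));
  });
}
```

Each receiver decrypts the message with its side of the same pairwise session, so no participant ever sees another pair's traffic in the clear.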

Even though the actual encryption/decryption API is different on web and mobile (“Insertable Streams” vs native Encryptors/Decryptors), the key exchange mechanism seemed like something that could be kept consistent between the two (or even three, counting Android and iOS separately) platforms. This led us to the next challenge: how can we reuse the JS web implementation of the Double Ratchet algorithm without any major changes, while also keeping in mind its possible performance impact on the mobile apps?

Since our mobile apps are based on React Native, the obvious solution was to wrap libolm so we could use the same code as on the web, but not all wrappers are created equal.

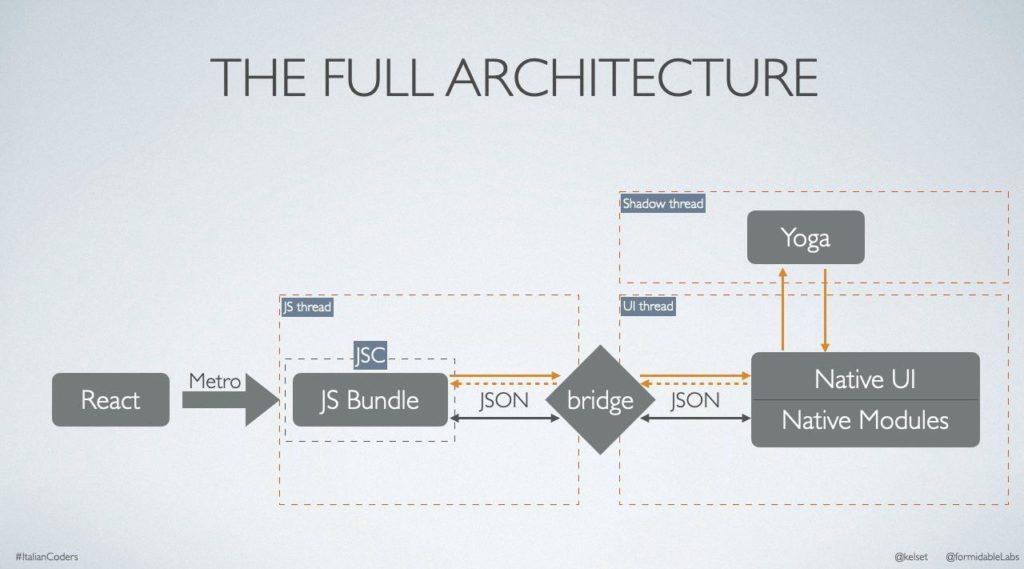

The “classical” approach – using Native Modules

There are three major drawbacks to this approach:

- Performance – communication over the bridge can be quite slow, and it only accepts very specific, serializable parameter types (WritableArray, etc.), which are converted to JSON.

- Lots of glue code is required, on both Android and iOS. Also, every future change will have to be implemented in both Java and ObjC.

- Since the native and the JS code run on different threads, bridge methods are asynchronous, which means that offering synchronous APIs is not possible (short of using synchronous bridge methods, which are discouraged because they hurt performance).

The first issue might not have had a major impact on this specific use case, since key exchange happens relatively infrequently. Having to implement every change twice is very likely to become a problem in the future, while the last issue, the asynchronicity of the bridge methods, is definitely a showstopper, since it would break the consistency between the web and mobile interfaces.
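To make the showstopper concrete, here is a minimal TypeScript sketch. The interface names (`SyncSession`, `BridgedSession`) and helpers (`wrapKey`, `wrapKeyAsync`) are hypothetical, and the toy sessions do no real encryption. A helper written once against a synchronous olm-style `encrypt` cannot accept a bridge-backed object, because the bridge turns every result into a Promise; an async copy is needed, and every caller up the stack has to become async as well.

```typescript
// Simplified, hypothetical subset of an olm-style session interface.
type OlmMessage = { type: number; body: string };

// What shared (web) code expects: a synchronous call.
interface SyncSession {
  encrypt(plaintext: string): OlmMessage;
}

// What a classic Native Module forces: every call crosses the async bridge.
interface BridgedSession {
  encrypt(plaintext: string): Promise<OlmMessage>;
}

// Shared helper written once against the synchronous interface.
function wrapKey(session: SyncSession, key: string): string {
  return session.encrypt(key).body;
}

// A BridgedSession cannot be passed to wrapKey (type error), so it needs its
// own async copy, and so does every function that calls it.
async function wrapKeyAsync(session: BridgedSession, key: string): Promise<string> {
  return (await session.encrypt(key)).body;
}

// Toy implementations standing in for real sessions (no actual encryption).
const syncSession: SyncSession = {
  encrypt: (p) => ({ type: 1, body: `enc(${p})` }),
};
const bridgedSession: BridgedSession = {
  encrypt: async (p) => ({ type: 1, body: `enc(${p})` }),
};
```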

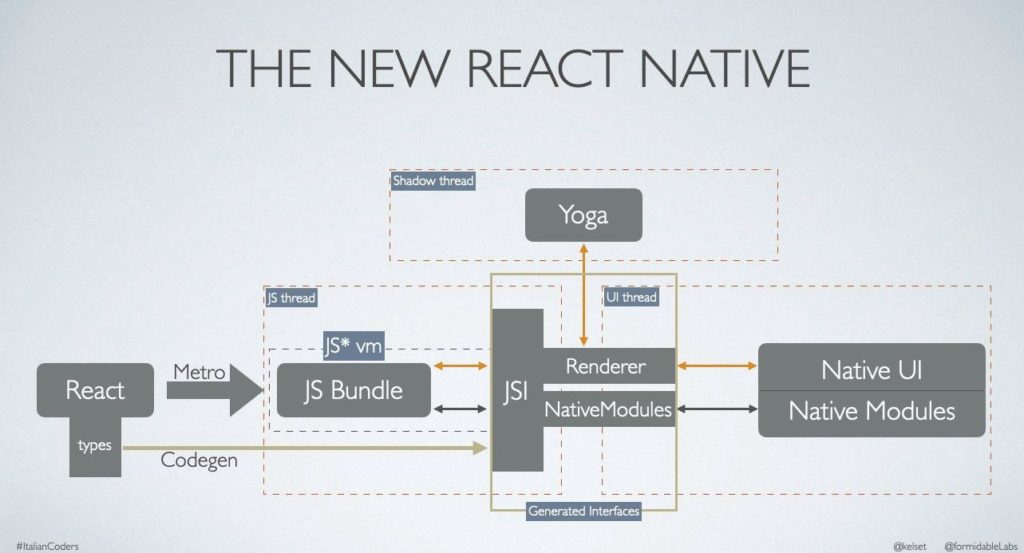

JavaScript Interface – JSI

JavaScript Interface (JSI) is a new layer in React Native, sitting between the JavaScript engine and native C++ code, that lets the two communicate directly. Since it doesn’t require serialization, it is a lot faster than the traditional bridge, and it also allows us to provide a performant synchronous API.

As we’ll show in what follows, it also solves the other two problems posed by the classical approach: the implementation has to be written or modified only once (most of the time, since some glue code is still required) and, most importantly, native methods called through JSI can be synchronous.

Android

The first challenge was to find the proper way of initializing the C++ libraries and exposing the so-called “host functions” (these are C++ functions callable from the JS code).

For this, we took advantage of the native module mechanism and the way the RN framework initializes native modules, creating OlmModule.java and OlmPackage.java. OlmPackage is just a simple ReactPackage whose native module is OlmModule.

OlmModule.java

Within the lifecycle of this ReactContextBaseJavaModule, the actual magic happens: loading the C++ libraries and exposing the necessary behavior to the JS side.

The C++ library is loaded inside a static initializer.

Exposing the host functions to JS is done in OlmModule’s initialize method, through the JNI native function nativeInstall. This function is implemented in cpp-adapter.cpp which, besides some JNI-specific code, calls jsiadapter::install, where the host functions are actually exposed. This is where the Android-specific glue code ends: jsiadapter is platform agnostic and, as we’ll show, is used by iOS as well.

iOS

We also used the iOS native bridge mechanism for initialization, but here the implementation is even simpler: Olm.h and Olm.mm contain the module, whose setBridge method calls jsiadapter::install to expose the host functions.

Exposing the host functions

As stated above, both the Android and the iOS specific code end up calling the platform-agnostic jsiadapter::install method. This is where the C++ methods are exposed: JS objects whose methods call directly into the C++ code are set as properties of jsiRuntime.global().

```cpp
Object module = Object(jsiRuntime);
// …add methods to module
jsiRuntime.global().setProperty(jsiRuntime, "_olm", move(module));
```

This object will be accessible on the JS side as a global variable. For our use case a single object is enough, but as many objects as necessary can be exposed here, without changing any of the platform-specific code.
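On the JS side, the installed object can be looked up with a thin, typed accessor. A minimal sketch, assuming a TypeScript codebase: the `OlmJsiModule` interface and `getOlmModule` helper are hypothetical typings that model only the `createOlmAccount` and `createOlmSession` factories this post exposes, with the returned host objects left opaque.

```typescript
// Hypothetical typings for the object installed as global._olm by
// jsiadapter::install. Returned host objects are left opaque here.
interface OlmJsiModule {
  createOlmAccount(): object;
  createOlmSession(): object;
}

// Look up the JSI-installed object; throws if the native module has not
// installed it yet (e.g. initialization has not run).
function getOlmModule(): OlmJsiModule {
  const mod = (globalThis as unknown as { _olm?: OlmJsiModule })._olm;
  if (mod === undefined) {
    throw new Error("_olm is not installed on the global object");
  }
  return mod;
}
```

Failing loudly here is a deliberate choice in this sketch: a missing `_olm` usually means the native module was never initialized, which is easier to diagnose than a later `undefined is not a function`.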

Adding methods to the exposed object

```cpp
// Inside jsiadapter::install, after `module` has been created:
// each host function returns a new HostObject wrapped as a JS object.
auto createOlmAccount = Function::createFromHostFunction(
    jsiRuntime, PropNameID::forAscii(jsiRuntime, "createOlmAccount"), 0,
    [](Runtime &runtime, const Value &thisValue, const Value *arguments,
       size_t count) -> Value {
      auto accountHostObject = AccountHostObject(&runtime);
      auto accountJsiObject = accountHostObject.asJsiObject();
      return move(accountJsiObject);
    });
module.setProperty(jsiRuntime, "createOlmAccount", move(createOlmAccount));

auto createOlmSession = Function::createFromHostFunction(
    jsiRuntime, PropNameID::forAscii(jsiRuntime, "createOlmSession"), 0,
    [](Runtime &runtime, const Value &thisValue, const Value *arguments,
       size_t count) -> Value {
      auto sessionHostObject = SessionHostObject(&runtime);
      auto sessionJsiObject = sessionHostObject.asJsiObject();
      return move(sessionJsiObject);
    });
module.setProperty(jsiRuntime, "createOlmSession", move(createOlmSession));
```

Two methods are exposed, createOlmAccount and createOlmSession, both of which return HostObjects.

HostObject

A HostObject is a C++ object that can be registered with the JS runtime: its exposed methods can be called from JS code, and it can also be passed back and forth between JS and C++ while remaining a fully operational C++ object.

For our use case, AccountHostObject and SessionHostObject are wrappers over the native olm objects OlmAccount and OlmSession, and they contain methods that can be called from JS code (identity_keys, generate_one_time_keys, one_time_keys etc. for AccountHostObject; create_outbound, create_inbound, encrypt, decrypt etc. for SessionHostObject).

These methods are again exposed from C++ to JS through host functions, in the HostObject::get method:

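```cpp
Value SessionHostObject::get(Runtime &rt, const PropNameID &sym) {
  // Name of the property accessed from JS, e.g. "create_outbound"
  auto methodName = sym.utf8(rt);

  if (methodName == "create_outbound") {
    return Function::createFromHostFunction(
        rt, PropNameID::forAscii(rt, "create_outbound"), 3,
        [](Runtime &runtime, const Value &thisValue, const Value *arguments,
           size_t count) -> Value {
          if (count < 3) {
            throw JSError(runtime, "create_outbound expects 3 arguments");
          }
          // Recover the C++ SessionHostObject behind the JS `this`
          auto sessionJsiObject = thisValue.asObject(runtime);
          auto sessionHostObject =
              sessionJsiObject.getHostObject<SessionHostObject>(runtime).get();

          // arguments[0] is an Account HostObject passed back from JS
          auto accountJsiObject = arguments[0].asObject(runtime);
          auto accountHostObject =
              accountJsiObject.getHostObject<AccountHostObject>(runtime).get();
          auto identityKey = arguments[1].asString(runtime).utf8(runtime);
          auto oneTimeKey = arguments[2].asString(runtime).utf8(runtime);

          sessionHostObject->createOutbound(accountHostObject->getOlmAccount(),
                                            identityKey, oneTimeKey);
          return Value(true);
        });
  }
  // …other methods (create_inbound, encrypt, decrypt etc.)

  // Unknown property names resolve to undefined in JS
  return Value::undefined();
}
```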

Example:

```javascript
const olmAccount = global._olm.createOlmAccount();
const olmSession = global._olm.createOlmSession();
olmSession.create_outbound(olmAccount, "someIdentityKey", "someOneTimeKey");
```

As shown, global._olm.createOlmAccount() and global._olm.createOlmSession() each return a HostObject. When a method is called on one of them (create_outbound in the example), HostObject::get is first called with the Runtime and the property name, and we use that name to return the function implementing the desired behavior.

Note that the HostObject the method was called on can be fully recovered on the C++ side:

```cpp
auto sessionJsiObject = thisValue.asObject(runtime);
auto sessionHostObject =
    sessionJsiObject.getHostObject<SessionHostObject>(runtime).get();
```

Parameters can also be passed from JS to C++, including other HostObjects:

```cpp
auto accountJsiObject = arguments[0].asObject(runtime);
auto accountHostObject =
    accountJsiObject.getHostObject<AccountHostObject>(runtime).get();
auto identityKey = arguments[1].asString(runtime).utf8(runtime);
auto oneTimeKey = arguments[2].asString(runtime).utf8(runtime);
```
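For readers more at home in JS, the name-based dispatch in HostObject::get has a close analogue in the `get` trap of a JavaScript `Proxy`. The sketch below is only an analogy to build intuition, not how JSI works internally: `FakeSessionState` and `makeSessionObject` are hypothetical, and the "session" merely records its arguments instead of running Olm.

```typescript
// JS-only analogy (not JSI): a Proxy whose get trap, like HostObject::get,
// receives the property name and returns a function for known methods.
class FakeSessionState {
  outbound: { identityKey: string; oneTimeKey: string } | null = null;
}

function makeSessionObject(state: FakeSessionState): Record<string, unknown> {
  return new Proxy<Record<string, unknown>>({}, {
    get(_target, prop) {
      if (prop === "create_outbound") {
        // Arguments arrive as plain JS values, as in the host function lambda.
        return (_account: object, identityKey: string, oneTimeKey: string): boolean => {
          state.outbound = { identityKey, oneTimeKey };
          return true;
        };
      }
      // Unknown names resolve to undefined, like Value::undefined() in C++.
      return undefined;
    },
  });
}
```

In both cases the object holds no per-method properties of its own; the behavior is produced on demand from the property name.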

Fully consistent interface

As mentioned at the very beginning, keeping the web and mobile interfaces consistent was the main goal, so, after implementing all the necessary JSI functionality, we wrapped it all into some nice TypeScript classes: Account and Session.

Their usage is shown in the example integration that comes with the SDK:

```javascript
const olmAccount = new Olm.Account();
olmAccount.create();
const identityKeys = olmAccount.identity_keys();

const olmSession = new Olm.Session();
olmSession.create();
olmSession.create_outbound(olmAccount, idKey, otKey);
```

This is the exact same API that the olm JS package exposes. Mission accomplished!
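One way such wrappers could delegate to the JSI host objects is sketched here. This is a hypothetical sketch, not the SDK’s actual classes: the `AccountHost`, `SessionHost` and `OlmJsi` typings, the `olmJsi` and `requireHost` helpers, and the choice to obtain the host object inside `create()` are all assumptions made to match the usage example above.

```typescript
// Hypothetical typings for the JSI host objects (only the methods used here).
interface AccountHost {
  identity_keys(): string;
}
interface SessionHost {
  create_outbound(account: AccountHost, identityKey: string, oneTimeKey: string): boolean;
}
interface OlmJsi {
  createOlmAccount(): AccountHost;
  createOlmSession(): SessionHost;
}

// Reads the object installed as global._olm by the native side.
function olmJsi(): OlmJsi {
  const mod = (globalThis as unknown as { _olm?: OlmJsi })._olm;
  if (!mod) {
    throw new Error("global._olm is not installed");
  }
  return mod;
}

class Account {
  private host: AccountHost | null = null;

  // Assumption: create() is where the underlying host object is obtained.
  create(): void {
    this.host = olmJsi().createOlmAccount();
  }

  identity_keys(): string {
    return this.requireHost().identity_keys();
  }

  // Public so that Session can hand the host object back to C++.
  requireHost(): AccountHost {
    if (!this.host) {
      throw new Error("Account.create() has not been called");
    }
    return this.host;
  }
}

class Session {
  private host: SessionHost | null = null;

  create(): void {
    this.host = olmJsi().createOlmSession();
  }

  create_outbound(account: Account, identityKey: string, oneTimeKey: string): void {
    if (!this.host) {
      throw new Error("Session.create() has not been called");
    }
    // The AccountHost travels back into C++, where getHostObject recovers it.
    this.host.create_outbound(account.requireHost(), identityKey, oneTimeKey);
  }
}
```

Keeping the host object behind a private field means callers only ever see the olm-style API, while create_outbound can still pass the underlying AccountHost back to C++.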

Next steps

Implementing this RN library that exposes the libolm functionality is just one piece of the bigger mobile E2EE puzzle. It will be integrated into the Jitsi Meet app and used to implement the E2EE communication channel between participants, i.e. for exchanging keys.

Key generation

Since the WebCrypto API is not available in RN, we have to expose a subset of its key generation methods (importing, deriving, generating random bytes), and again we plan to do so through JSI.

It turns out the olm library contains these methods, so we may expose them in the react-native-olm library.

Performing the actual media encryption/decryption

In its C++ layer, WebRTC provides a simple API that lets us achieve the same result we get on the web with “insertable streams”: FrameEncryptorInterface and FrameDecryptorInterface.

The encryptor is set on an RTPSender and the decryptor on an RTPReceiver; they basically act as a proxy for each frame that is sent or received, making it possible to add logic for constructing and deconstructing an SFrame out of each frame.

Running this code on the native side is essential: these operations happen for every frame, many times a second, so the overhead of communicating between JS and native would be severe and would probably make the audio and video streams incoherent.

The only operations done from the JS side will be enabling E2EE and the key exchange steps. We will have to expose methods for passing the AES-GCM keys from JS to the native FrameEncryptors and FrameDecryptors, most likely through JSI.

Oh hey there vodozemac!

While we were busy working on this, the good folks over at Matrix created vodozemac, a new Rust implementation of libolm, and they strongly recommend migrating to it going forward. At the moment it only provides bindings for JS and Python, while C++ bindings are still in progress. We’ll keep a close eye on it and switch to vodozemac once we have all the pieces in place.

Get it!

You can start tinkering with it today: here is the GitHub repo.

❤️ Your personal meetings team.

Author: Titus Moldovan